Homeostatic STDP: SORF model

#!pip install ANNarchy

Reimplementation of the SORF model published in:

Carlson, K.D.; Richert, M.; Dutt, N.; Krichmar, J.L., “Biologically plausible models of homeostasis and STDP: Stability and learning in spiking neural networks,” in The 2013 International Joint Conference on Neural Networks (IJCNN), pp. 1-8, 4-9 Aug. 2013. doi: 10.1109/IJCNN.2013.6706961

import numpy as np
import matplotlib.pyplot as plt
import ANNarchy as ann
from tqdm import tqdm

ANNarchy 5.0 (5.0.0) on linux (posix).

Hyperparameters:

nb_neuron = 4       # Number of excitatory neurons, and of inhibitory neurons
size = (32, 32)     # Input size (pixels)
freq = 1.2          # Number of grating cycles per half-image
nb_stim = 40        # Number of gratings (orientations) per epoch
nb_epochs = 20      # Number of epochs
max_freq = 28.      # Maximum firing rate (Hz) of the Poisson neurons
T = 10000.          # Window (ms) over which the firing rate is averaged

Neuron type:
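To make the neuron model below more concrete, here is a minimal sketch of its two less familiar ingredients: the Izhikevich membrane update with the regular-spiking (RS) parameters `a`, `b`, `c`, `d`, and the voltage-dependent NMDA gating function `nmda(v, -80, 60)`. It is a simplification, not the ANNarchy model: it assumes forward Euler with a 0.1 ms step instead of the midpoint scheme, a constant injected current in place of the synaptic conductances, and no refractory period. The names `simulate_rs`, `I_ext` and `dt` are hypothetical.

```python
# RS parameters copied from RSNeuron
a, b, c, d = 0.02, 0.2, -65.0, 8.0

def nmda(v, t=-80.0, s=60.0):
    # Same formula as the `functions` entry of RSNeuron:
    # 0 at v = t, 0.5 at v = t + s, approaching 1 for depolarized v.
    x = ((v - t) / s) ** 2
    return x / (1.0 + x)

def simulate_rs(I_ext, duration=1000.0, dt=0.1):
    # Forward-Euler Izhikevich RS neuron (hypothetical simplification of the
    # midpoint scheme) driven by a constant current; returns spike times in ms.
    v, u = -65.0, -13.0
    spikes = []
    for step in range(int(duration / dt)):
        dv = (0.04 * v + 5.0) * v + 140.0 - u + I_ext
        du = a * (b * v - u)
        v = max(v + dt * dv, -90.0)  # same lower bound as min=-90. in RSNeuron
        u += dt * du
        if v >= 30.0:                # spike condition "v >= 30."
            spikes.append(step * dt)
            v, u = c, u + d          # reset: v = c, u += d
    return spikes

print(nmda(-80.0), nmda(-20.0))
print(len(simulate_rs(0.0)), len(simulate_rs(10.0)))
```

Without input the neuron relaxes to its resting potential near -70 mV and stays silent, whereas a current of 10 makes it fire repeatedly. In the full model, the NMDA conductance is multiplied by `nmda(v, ...)`, so it contributes little near rest and more when the cell is depolarized.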

# Izhikevich conductance-based (COBA) neuron with AMPA, NMDA, GABA-A and GABA-B receptors
RSNeuron = ann.Neuron(
    parameters = dict(
        a = 0.02,
        b = 0.2,
        c = -65.,
        d = 8.,
        tau_ampa = 5.,
        tau_nmda = 150.,
        tau_gabaa = 6.,
        tau_gabab = 150.,
        vrev_ampa = 0.0,
        vrev_nmda = 0.0,
        vrev_gabaa = -70.0,
        vrev_gabab = -90.0,
    ),
    equations = [
        # Inputs
        ann.Variable("""
            I = g_ampa * (vrev_ampa - v) + g_nmda * nmda(v, -80.0, 60.0) * (vrev_nmda -v) + g_gabaa * (vrev_gabaa - v) + g_gabab * (vrev_gabab -v)
        """),
        # Midpoint scheme
        ann.Variable("dv/dt = (0.04 * v + 5.0) * v + 140.0 - u + I", init=-65., min=-90., method='midpoint'),
        ann.Variable("du/dt = a * (b*v - u)", init=-13., method='midpoint'),
        # Conductances
        ann.Variable("tau_ampa * dg_ampa/dt = -g_ampa", method='exponential'),
        ann.Variable("tau_nmda * dg_nmda/dt = -g_nmda", method='exponential'),
        ann.Variable("tau_gabaa * dg_gabaa/dt = -g_gabaa", method='exponential'),
        ann.Variable("tau_gabab * dg_gabab/dt = -g_gabab", method='exponential'),
    ],
    spike = "v >= 30.",
    reset = """
        v = c
        u += d
        g_ampa = 0.0
        g_nmda = 0.0
        g_gabaa = 0.0
        g_gabab = 0.0
    """,
    functions = """
        nmda(v, t, s) = ((v-t)/(s))^2 / (1.0 + ((v-t)/(s))^2)
    """,
    refractory=1.0
)

Synapse:
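Before the ANNarchy definition, here is a plain-Python sketch of the update it encodes, using the default parameter values below. As we read the definition, the traces `ltp` and `ltd` are reset (not incremented) to `A_plus` / `A_minus` on pre- and post-synaptic spikes and decay exponentially, so `stdp` is the trace of whichever spike came last; the `w += ...` equation is then evaluated at every simulation step. The helper names `stdp_term` and `weight_step` are hypothetical and only mirror the equations.

```python
import math

# Default parameters of homeo_stdp
tau_plus, tau_minus = 60.0, 90.0
A_plus, A_minus = 0.000045, 0.00003
alpha, beta, gamma = 0.1, 50.0, 50.0
Rtarget, T = 10.0, 10000.0

def stdp_term(t, t_pre, t_post):
    # Value of `stdp` at time t (ms) given the last pre/post spike times:
    # the ltp trace if the post spike is the most recent, else minus the ltd trace.
    if t_post >= t_pre:
        return A_plus * math.exp(-(t - t_pre) / tau_plus)
    return -A_minus * math.exp(-(t - t_post) / tau_minus)

def weight_step(w, R, stdp):
    # One evaluation of `w += (alpha*w*(1 - R/Rtarget) + beta*stdp) * K`,
    # with K as defined in the synapse and the same [0, 10] bounds on w.
    K = R / (T * (1.0 + abs(1.0 - R / Rtarget) * gamma))
    w_new = w + (alpha * w * (1.0 - R / Rtarget) + beta * stdp) * K
    return min(max(w_new, 0.0), 10.0)

print(weight_step(0.01, 10.0, 0.0))  # at the target rate the homeostatic term vanishes
print(weight_step(0.01, 20.0, 0.0) < 0.01, weight_step(0.01, 5.0, 0.0) > 0.01)
```

Firing above `Rtarget` scales the weights down and firing below it scales them up, while the denominator of `K` grows with `|1 - R/Rtarget|` and damps the update far from the target. A silent neuron (`R = 0`) gives `K = 0`, so its weights do not change at all. Note that the network code later gives the Exc to Inh projection negative `A_plus` and `A_minus`, which flips the sign of `stdp_term` in the same way.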

# STDP with homeostatic regulation
homeo_stdp = ann.Synapse(
    parameters=dict(
        # STDP
        tau_plus = 60.,
        tau_minus = 90.,
        A_plus = 0.000045,
        A_minus = 0.00003,
        # Homeostatic regulation
        alpha = 0.1,
        beta = 50.0, # <- Difference with the original implementation
        gamma = 50.0,
        Rtarget = 10.,
        T = 10000.,
    ),
    equations = [
        # Homeostatic values
        ann.Variable("R = post.r", locality='semiglobal'),
        ann.Variable("K = R/(T * (1. + fabs(1. - R / Rtarget) * gamma))", locality='semiglobal'),
        # Nearest-neighbour
        ann.Variable("stdp = if t_post >= t_pre: ltp else: - ltd"),
        ann.Variable("w += (alpha * w * (1- R/Rtarget) + beta * stdp ) * K", min=0.0, max=10.0),
        # Traces
        ann.Variable("tau_plus * dltp/dt = -ltp", method="exponential"),
        ann.Variable("tau_minus * dltd/dt = -ltd", method="exponential"),
    ],
    pre_spike="""
        g_target += w
        ltp = A_plus
    """,
    post_spike="ltd = A_minus"
)

Network:
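The network below is small enough to picture as a handful of weight matrices. The following NumPy sketch builds dense stand-ins with the same shapes and initial ranges, using a (post, pre) layout because the plotting code later indexes `w_on_start[i]` by post-synaptic neuron. It is only an illustration: the variable names and the fixed random seed are hypothetical, and ANNarchy's internal storage may differ.

```python
import numpy as np

rng = np.random.default_rng(0)          # hypothetical seed, for reproducibility
size, nb_neuron = (32, 32), 4
n_in = size[0] * size[1]                # 1024 pixels per input map

# one_to_one: Poisson pixel k drives buffer neuron k only -> diagonal matrix
w_poiss_buffer = np.diag(rng.uniform(0.2, 0.6, n_in))

# all_to_all, plastic (homeo_stdp): every buffer pixel projects to every Exc neuron
w_on_exc = rng.uniform(0.004, 0.015, (nb_neuron, n_in))
w_off_exc = rng.uniform(0.004, 0.015, (nb_neuron, n_in))

# Competition: Exc -> Inh (plastic) and Inh -> Exc (static)
w_exc_inh = rng.uniform(0.116, 0.403, (nb_neuron, nb_neuron))
w_inh_exc = rng.uniform(0.065, 0.259, (nb_neuron, nb_neuron))

n_plastic = w_on_exc.size + w_off_exc.size + w_exc_inh.size
print(n_in, n_plastic)
```

Almost all plastic synapses are feedforward (2 x 4 x 1024), which is why the receptive fields plotted at the end are the main readout of learning.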

# Network
net = ann.Network()

# Input populations
OnPoiss = net.create(ann.PoissonPopulation(size, rates=1.0))
OffPoiss = net.create(ann.PoissonPopulation(size, rates=1.0))

# RS neurons for the input buffers
OnBuffer = net.create(size, RSNeuron)
OffBuffer = net.create(size, RSNeuron)

# Connect the buffers
OnPoissBuffer = net.connect(OnPoiss, OnBuffer, ['ampa', 'nmda'])
OnPoissBuffer.one_to_one(ann.Uniform(0.2, 0.6))
OffPoissBuffer = net.connect(OffPoiss, OffBuffer, ['ampa', 'nmda'])
OffPoissBuffer.one_to_one(ann.Uniform(0.2, 0.6))

# Excitatory and inhibitory neurons
Exc = net.create(nb_neuron, RSNeuron)
Inh = net.create(nb_neuron, RSNeuron)
Exc.compute_firing_rate(T)
Inh.compute_firing_rate(T)

# Input connections
OnBufferExc = net.connect(OnBuffer, Exc, ['ampa', 'nmda'], homeo_stdp)
OnBufferExc.all_to_all(ann.Uniform(0.004, 0.015))
OffBufferExc = net.connect(OffBuffer, Exc, ['ampa', 'nmda'], homeo_stdp)
OffBufferExc.all_to_all(ann.Uniform(0.004, 0.015))

# Competition
ExcInh = net.connect(Exc, Inh, ['ampa', 'nmda'], homeo_stdp)
ExcInh.all_to_all(ann.Uniform(0.116, 0.403))
# Exc -> Inh plasticity uses its own target rate, time constants and negative STDP amplitudes
ExcInh.Rtarget = 75.
ExcInh.tau_plus = 51.
ExcInh.tau_minus = 78.
ExcInh.A_plus = -0.000041
ExcInh.A_minus = -0.000015
InhExc = net.connect(Inh, Exc, ['gabaa', 'gabab'])
InhExc.all_to_all(ann.Uniform(0.065, 0.259))

net.compile()

Compiling network 1... OK

# Inputs

def get_grating(theta):
    # Sine grating of orientation theta, split into its ON (positive) and OFF (negative) parts
    x = np.linspace(-1., 1., size[0])
    y = np.linspace(-1., 1., size[1])
    xx, yy = np.meshgrid(x, y)
    z = np.sin(2.*np.pi*(np.cos(theta)*xx + np.sin(theta)*yy)*freq)
    return np.maximum(z, 0.), -np.minimum(z, 0.0)
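A quick standalone check of what `get_grating` produces. Because `x` spans [-1, 1], the sine argument grows by `2 * freq` cycles across the image, so `freq = 1.2` gives 2.4 cycles in total, i.e. 1.2 per half-image. The sketch below is a hypothetical variant that takes `size` and `freq` as arguments and also returns the raw grating `z`, so the ON/OFF split and the orientation set used in the learning loop can be verified.

```python
import numpy as np

def get_grating(theta, size=(32, 32), freq=1.2):
    # Same construction as above; returns (ON, OFF, raw grating)
    x = np.linspace(-1., 1., size[0])
    y = np.linspace(-1., 1., size[1])
    xx, yy = np.meshgrid(x, y)
    z = np.sin(2. * np.pi * (np.cos(theta) * xx + np.sin(theta) * yy) * freq)
    return np.maximum(z, 0.), -np.minimum(z, 0.), z

on, off, z = get_grating(0.0)

# The two channels are non-negative, never active at the same pixel, and ON - OFF = z
print(np.allclose(on - off, z), np.all(on * off == 0))

# theta = 0 varies only along x, so every row of the image is identical
print(np.allclose(z, z[0]))

# Orientations used by the learning loop: pi * stim / nb_stim, i.e. 4.5 degree steps in [0, pi)
nb_stim = 40
orientations = np.pi * np.arange(nb_stim) / nb_stim
print(np.degrees(orientations[1]))
```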

# Initial weights
w_on_start = OnBufferExc.w
w_off_start = OffBufferExc.w

# Monitors
m = net.monitor(Exc, 'r')
n = net.monitor(Inh, 'r')
o = net.monitor(OnBufferExc[0], 'w', period=1000.)
p = net.monitor(ExcInh[0], 'w', period=1000.)

# Learning procedure
from time import time
import random

tstart = time()
stim_order = list(range(nb_stim))
for epoch in tqdm(range(nb_epochs)):
    random.shuffle(stim_order)
    for stim in stim_order:
        # Grating with orientation pi*stim/nb_stim (presentation order is shuffled each epoch)
        rates_on, rates_off = get_grating(np.pi*stim/float(nb_stim))
        # Set it as input to the Poisson neurons
        OnPoiss.rates = max_freq * rates_on
        OffPoiss.rates = max_freq * rates_off
        # Simulate for 2 s
        net.simulate(2000.)
        # Relax the Poisson inputs
        OnPoiss.rates = 1.
        OffPoiss.rates = 1.
        # Simulate for 500 ms
        net.simulate(500.)
print('Done in ', time()-tstart)

# Recordings
datae = m.get('r')
datai = n.get('r')
dataw = o.get('w')
datal = p.get('w')


Done in 205.57833003997803

# Final weights

w_on_end = OnBufferExc.w

w_off_end = OffBufferExc.w
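The learning loop above simulates a fixed schedule, so its length and the amount of data recorded by the monitors follow from simple arithmetic. The sketch below assumes a simulation step of 1 ms (the notebook does not set `dt` explicitly) and 8 bytes per recorded value; both are assumptions, and the actual memory used by the monitors may be higher.

```python
# Values copied from the hyperparameters and the learning loop
nb_epochs, nb_stim, nb_neuron = 20, 40, 4
t_stim, t_relax = 2000.0, 500.0           # ms of grating, ms of relaxation

per_epoch_ms = nb_stim * (t_stim + t_relax)
total_ms = nb_epochs * per_epoch_ms

dt = 1.0  # ms, ASSUMED simulation step
n_rate_samples = int(total_ms / dt)        # m and n record 'r' at every step
n_weight_samples = int(total_ms / 1000.0)  # o and p use period=1000.

# Rough raw size (MB) of the two rate monitors, ASSUMING 8 bytes per value
rate_mb = 2 * n_rate_samples * nb_neuron * 8 / 1e6

print(per_epoch_ms / 1000.0, total_ms / 1000.0)  # 100.0 2000.0 (seconds)
print(n_rate_samples, n_weight_samples, rate_mb)
```

If memory is a concern, the rate monitors could be given a `period` as the weight monitors already are (e.g. `net.monitor(Exc, 'r', period=10.)`), trading temporal resolution for a proportionally smaller recording.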

# Plot

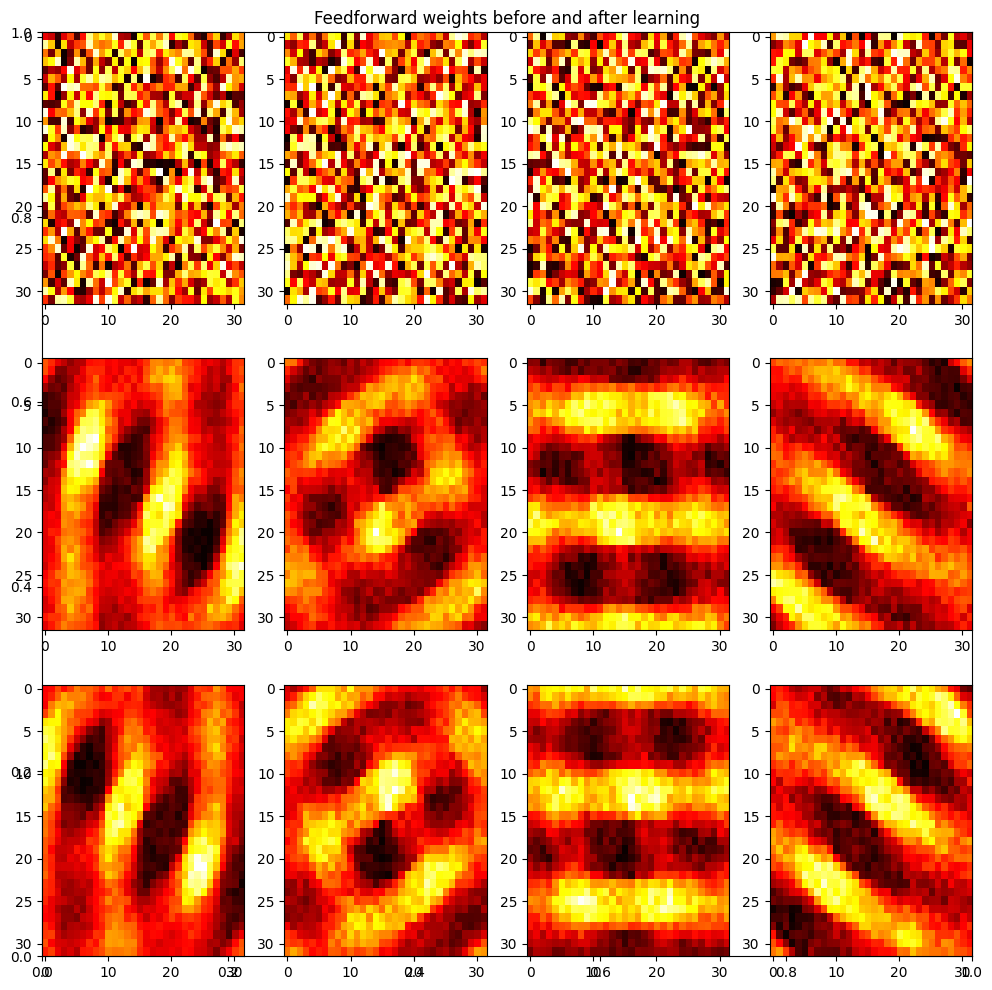

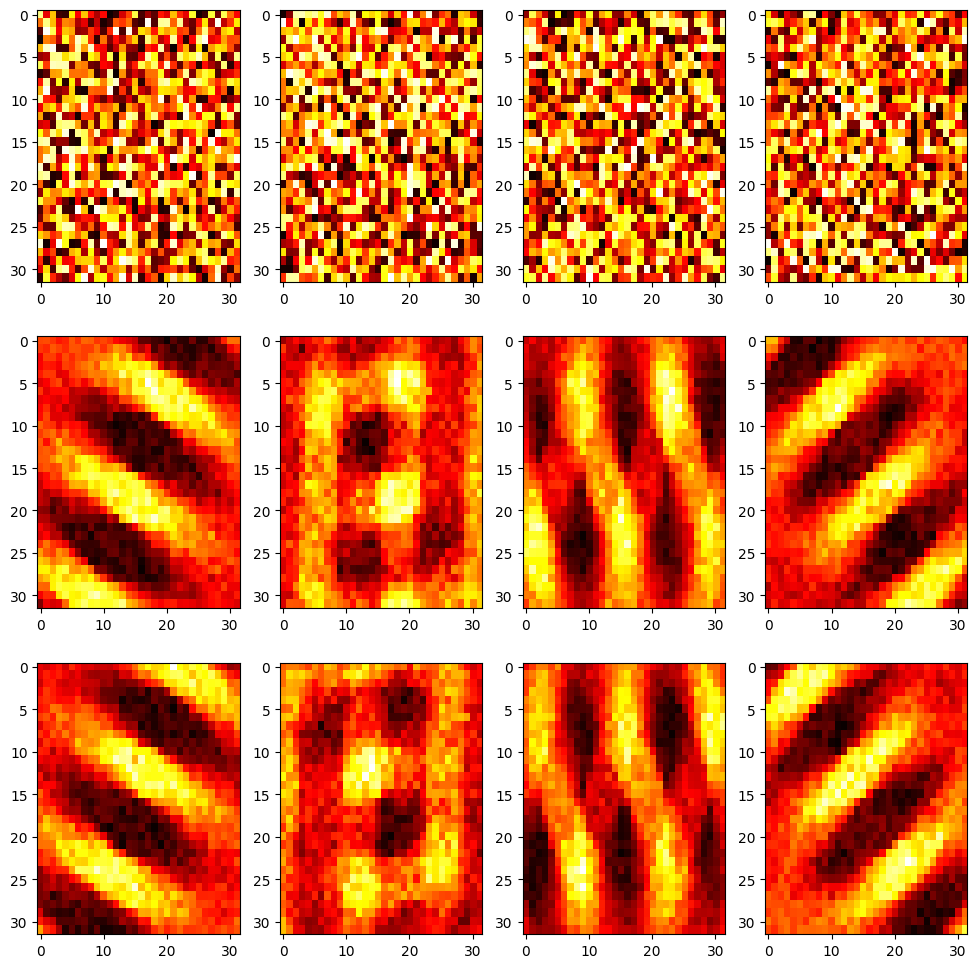

plt.figure(figsize=(12, 12))
plt.suptitle('Feedforward weights before and after learning')
for i in range(nb_neuron):
    # Row 1: initial ON weights of Exc neuron i
    plt.subplot(3, nb_neuron, i + 1)
    plt.imshow(np.array(w_on_start[i]).reshape(size), aspect='auto', cmap='hot')
    # Row 2: final ON weights
    plt.subplot(3, nb_neuron, nb_neuron + i + 1)
    plt.imshow(np.array(w_on_end[i]).reshape(size), aspect='auto', cmap='hot')
    # Row 3: final OFF weights
    plt.subplot(3, nb_neuron, 2*nb_neuron + i + 1)
    plt.imshow(np.array(w_off_end[i]).reshape(size), aspect='auto', cmap='hot')

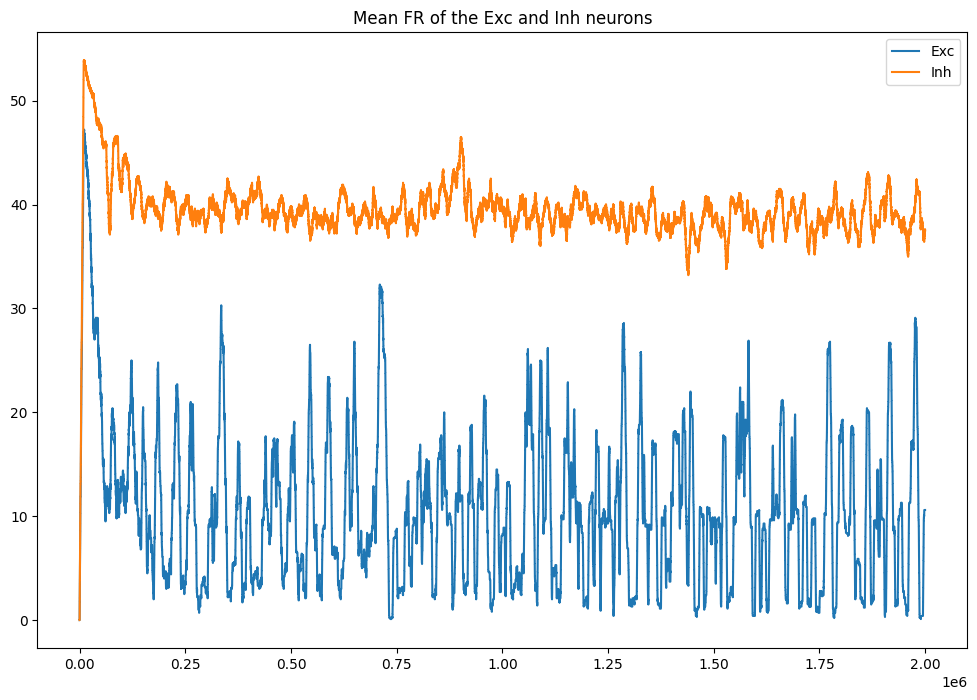

plt.figure(figsize=(12, 8))
plt.plot(datae[:, 0], label='Exc (neuron 0)')
plt.plot(datai[:, 0], label='Inh (neuron 0)')
plt.title('Mean firing rate of the first Exc and Inh neurons')
plt.legend()

plt.figure(figsize=(12, 8))
plt.subplot(121)
plt.imshow(np.array(dataw, dtype='float').T, aspect='auto', cmap='hot')
plt.title('Timecourse of ON feedforward weights (Exc neuron 0)')
plt.colorbar()
plt.subplot(122)
plt.imshow(np.array(datal, dtype='float').T, aspect='auto', cmap='hot')
plt.title('Timecourse of Exc -> Inh weights (Inh neuron 0)')
plt.colorbar()
plt.show()
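The rates plotted above come from `compute_firing_rate(T)`, and the same variable is read by the synapse as `R = post.r`. As we understand it, `r` is the number of spikes emitted in the last `T` ms converted to Hz; the NumPy sketch below reproduces that idea on a hypothetical regular spike train, and is not ANNarchy's implementation.

```python
import numpy as np

def windowed_rate(spike_times, t, window=10000.0):
    # Spikes in (t - window, t], divided by the window length in seconds -> Hz
    spike_times = np.asarray(spike_times, dtype=float)
    n = np.count_nonzero((spike_times > t - window) & (spike_times <= t))
    return n / (window / 1000.0)

# Hypothetical regular 10 Hz train: one spike every 100 ms for 20 s
spikes = np.arange(0.0, 20000.0, 100.0)
print(windowed_rate(spikes, 5000.0))   # window not yet full: 51 spikes / 10 s
print(windowed_rate(spikes, 15000.0))  # full window: 100 spikes / 10 s
```

With a 10 s window the estimate is smooth but slow, and during the first `T` ms of a simulation the window is not yet filled, so in this sketch the rate is underestimated until then.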