PyNN and Brian examples

#!pip install ANNarchy

import numpy as np

import matplotlib.pyplot as plt

import ANNarchy as ann

ann.setup(dt=0.1)

ANNarchy 4.8 (4.8.2) on darwin (posix).

IF_curr_alpha

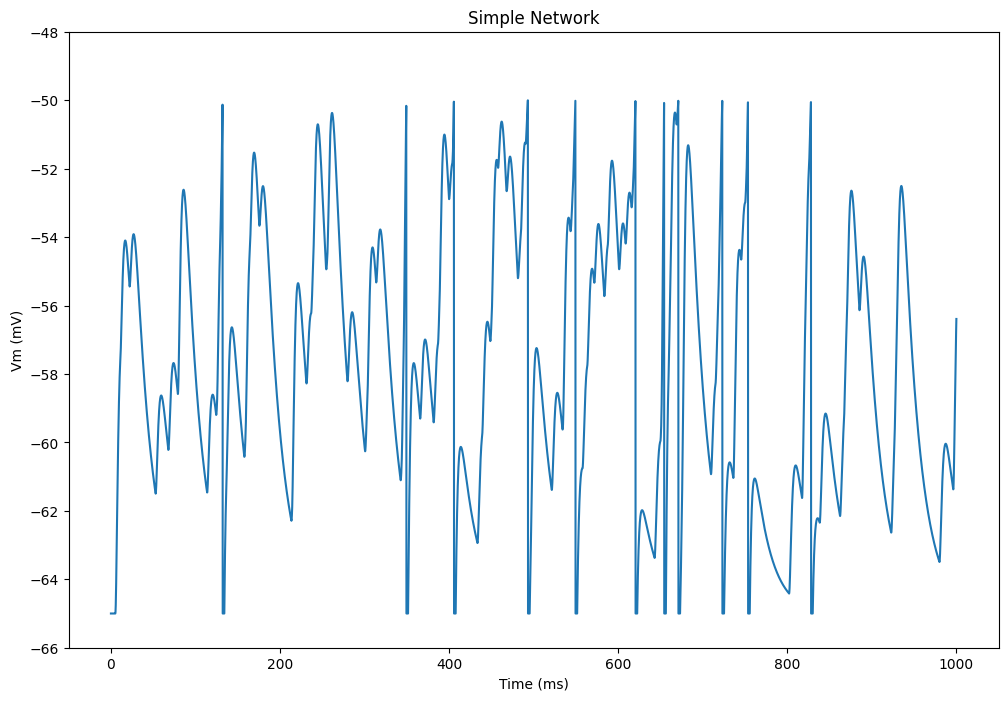

Simple network with a Poisson spike source projecting to a pair of IF_curr_alpha neurons.

This is a reimplementation of the PyNN example:

http://www.neuralensemble.org/trac/PyNN/wiki/Examples/simpleNetwork
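The Poisson input in the code that follows is built by accumulating exponentially distributed inter-spike intervals. Here is a minimal standard-library sketch of that idea. The helper name `poisson_spike_times` is hypothetical, and the truncation at `tstop_ms` is an added assumption (the notebook keeps every drawn spike).

```python
import random
from itertools import accumulate


def poisson_spike_times(rate_hz, tstop_ms, seed=0):
    """Return sorted spike times (ms) of a homogeneous Poisson process.

    Hypothetical helper for illustration; not part of ANNarchy or PyNN.
    """
    rng = random.Random(seed)
    mean_isi_ms = 1000.0 / rate_hz
    # Draw twice the expected number of intervals so that the train
    # almost surely covers the whole simulation window.
    n_draws = int(2 * tstop_ms * rate_hz / 1000.0)
    isis = [rng.expovariate(1.0 / mean_isi_ms) for _ in range(n_draws)]
    # The cumulative sum of the intervals gives the spike times.
    times = accumulate(isis)
    # Assumption: drop spikes that fall after the end of the simulation.
    return [t for t in times if t < tstop_ms]


spikes = poisson_spike_times(rate_hz=100.0, tstop_ms=1000.0)
print(len(spikes), "spikes in 1 s")
```

The spike count varies with the seed, but should stay close to `rate_hz * tstop_ms / 1000`.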

# Parameters

tstop = 1000.0

rate = 100.0

# Create the Poisson spikes

number = int(2*tstop*rate/1000.0)

np.random.seed(26278342)

spike_times = np.add.accumulate(np.random.exponential(1000.0/rate, size=number))

# Input population

inp = ann.SpikeSourceArray(list(spike_times))

# Output population

pop = ann.Population(2, ann.IF_curr_alpha)

pop.tau_refrac = 2.0

pop.v_thresh = -50.0

pop.tau_syn_E = 2.0

pop.tau_syn_I = 2.0

# Excitatory projection

proj = ann.Projection(inp, pop, 'exc')

proj.connect_all_to_all(weights=1.0)

# Monitor

m = ann.Monitor(pop, ['spike', 'v'])

# Compile the network

net = ann.Network()

net.add([inp, pop, proj, m])

net.compile()

# Simulate

net.simulate(tstop)

data = net.get(m).get()

# Plot the results

plt.figure(figsize=(12, 8))

plt.plot(ann.dt()*np.arange(tstop/ann.dt()), data['v'][:, 0])

plt.xlabel('Time (ms)')

plt.ylabel('Vm (mV)')

plt.ylim([-66.0, -48.0])

plt.title('Simple Network')

plt.show()

Compiling network 1... OK

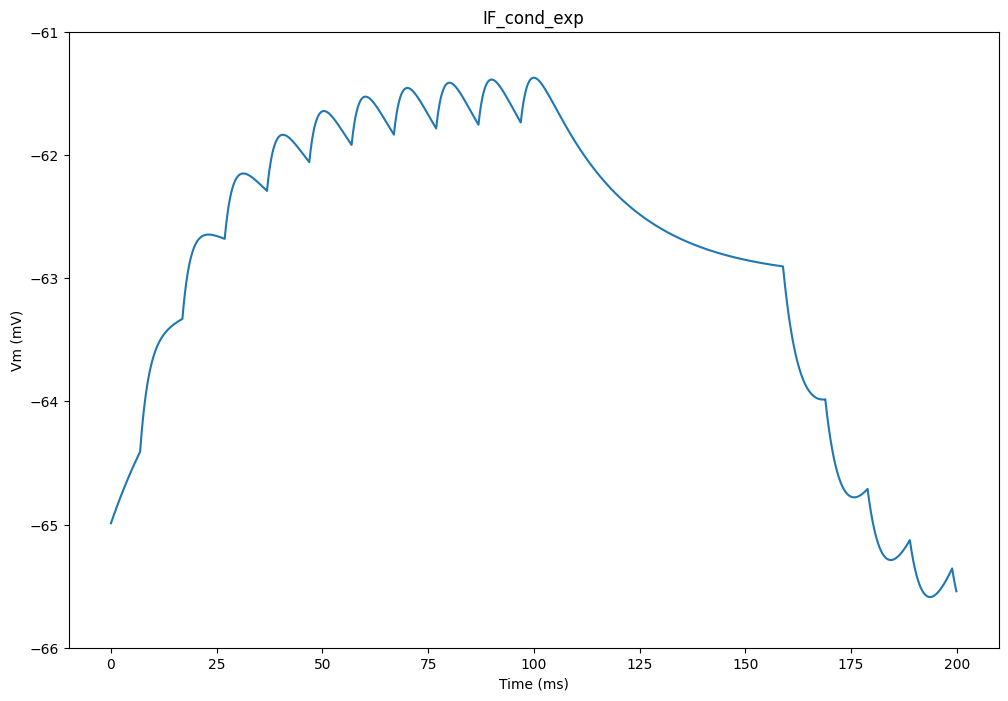

IF_cond_exp

A single IF neuron with exponential, conductance-based synapses, fed by two spike sources.

This is a reimplementation of the PyNN example:

http://www.neuralensemble.org/trac/PyNN/wiki/Examples/IF_cond_exp
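To make the conductance-based dynamics concrete, here is a hand-rolled forward-Euler sketch of a leaky integrate-and-fire cell with exponentially decaying conductances. It is not ANNarchy's generated code. The synaptic and threshold parameters are copied from this section; the membrane values `V_REST`, `CM` and `TAU_M` are assumptions chosen for illustration.

```python
# Values taken from the parameters set in this section.
I_OFFSET, TAU_REFRAC = 0.1, 3.0
V_THRESH, V_RESET = -51.0, -70.0
TAU_SYN_E, TAU_SYN_I = 2.0, 5.0
E_REV_E, E_REV_I = 0.0, -80.0
# Assumed membrane parameters (not stated in the notebook).
V_REST, CM, TAU_M = -65.0, 1.0, 20.0


def simulate(exc_times, inh_times, w_exc, w_inh, tstop=200.0, dt=0.1):
    """Forward-Euler integration; returns the membrane trace and spike times."""
    exc_steps = {round(t / dt) for t in exc_times}
    inh_steps = {round(t / dt) for t in inh_times}
    v, g_exc, g_inh = V_REST, 0.0, 0.0
    refractory_until = -1.0
    trace, spikes = [], []
    for step in range(round(tstop / dt)):
        t = step * dt
        # An arriving spike increments the conductance by the weight.
        if step in exc_steps:
            g_exc += w_exc
        if step in inh_steps:
            g_inh += w_inh
        if t >= refractory_until:
            synaptic = g_exc * (E_REV_E - v) + g_inh * (E_REV_I - v)
            v += dt * ((V_REST - v) / TAU_M + (synaptic + I_OFFSET) / CM)
        # Conductances decay exponentially between spikes.
        g_exc -= dt * g_exc / TAU_SYN_E
        g_inh -= dt * g_inh / TAU_SYN_I
        if v > V_THRESH:
            spikes.append(t)
            v = V_RESET
            refractory_until = t + TAU_REFRAC
        trace.append(v)
    return trace, spikes


# Source spike times shifted by the projection delays (2 ms and 4 ms).
exc_arrivals = [float(i) + 2.0 for i in range(5, 105, 10)]
inh_arrivals = [float(i) + 4.0 for i in range(155, 255, 10)]
trace, spikes = simulate(exc_arrivals, inh_arrivals, w_exc=0.006, w_inh=0.02)
print(f"min v = {min(trace):.2f} mV, max v = {max(trace):.2f} mV, spikes = {len(spikes)}")
```

With these weights the cell stays below threshold: excitatory input depolarizes it by a few millivolts during the first 100 ms, and the late inhibitory input pulls it back down.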

# Parameters

tstop = 200.0

# Input populations with predetermined spike times

spike_sourceE = ann.SpikeSourceArray(spike_times=[float(i) for i in range(5, 105, 10)])

spike_sourceI = ann.SpikeSourceArray(spike_times=[float(i) for i in range(155, 255, 10)])

# Population with one IF_cond_exp neuron

ifcell = ann.Population(1, ann.IF_cond_exp)

ifcell.set({
    'i_offset': 0.1, 'tau_refrac': 3.0,
    'v_thresh': -51.0, 'tau_syn_E': 2.0,
    'tau_syn_I': 5.0, 'v_reset': -70.0,
    'e_rev_E': 0.0, 'e_rev_I': -80.0,
})

# Projections

connE = ann.Projection(spike_sourceE, ifcell, 'exc')

connE.connect_all_to_all(weights=0.006, delays=2.0)

connI = ann.Projection(spike_sourceI, ifcell, 'inh')

connI.connect_all_to_all(weights=0.02, delays=4.0)

# Monitor

m = ann.Monitor(ifcell, ['spike', 'v'])

# Compile the network

net = ann.Network()

net.add([spike_sourceE, spike_sourceI, ifcell, connE, connI, m])

net.compile()

# Simulate

net.simulate(tstop)

data = net.get(m).get()

# Show the result

plt.figure(figsize=(12, 8))

plt.plot(ann.dt()*np.arange(tstop/ann.dt()), data['v'][:, 0])

plt.xlabel('Time (ms)')

plt.ylabel('Vm (mV)')

plt.ylim([-66.0, -61.0])

plt.title('IF_cond_exp')

plt.show()

Compiling network 1... OK

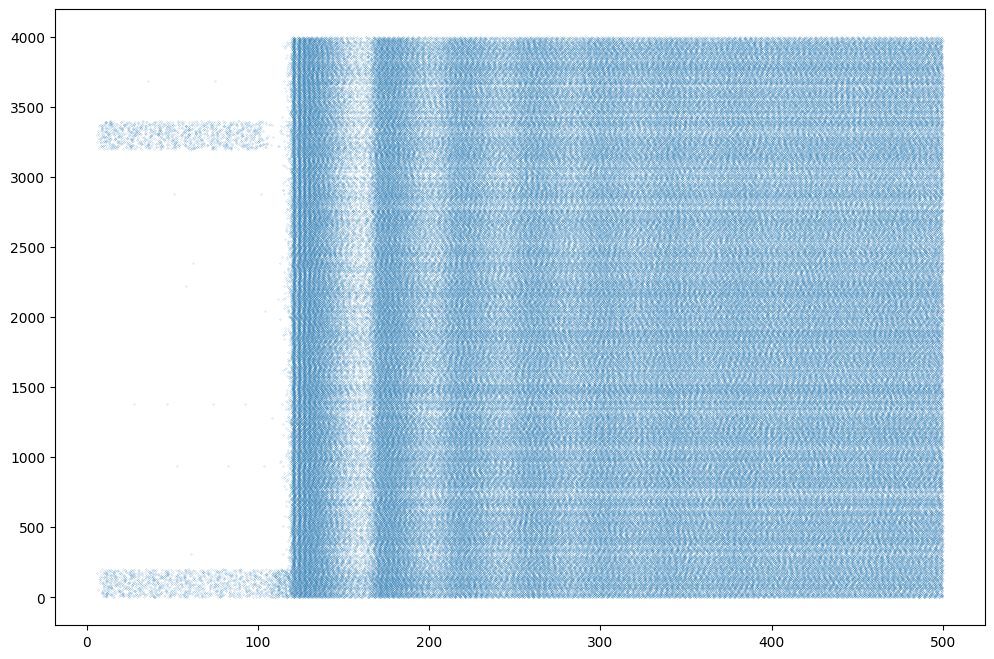

EIF_cond_exp

Network of EIF neurons with exponentially decreasing conductance-based synapses.

This is a reimplementation of the Brian example:

http://brian.readthedocs.org/en/1.4.1/examples-misc_expIF_network.html
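To see how the exponential term in the membrane equation of this section behaves, here is a small sketch that evaluates the right-hand side of `dv/dt` at a few voltages. The parameter values are copied from the neuron definition in this section; the function name `dv_dt` is only illustrative.

```python
import math

# Parameters copied from the neuron definition in this section.
V_REST, TAU_M, CM = -70.0, 10.0, 0.2
DELTA_T, V_THRESH = 3.0, -55.0
E_REV_E, E_REV_I = 0.0, -80.0


def dv_dt(v, g_exc=0.0, g_inh=0.0):
    """Right-hand side of the EIF membrane equation (mV/ms)."""
    leak = V_REST - v
    spike_term = DELTA_T * math.exp((v - V_THRESH) / DELTA_T)
    synaptic = g_exc * (E_REV_E - v) + g_inh * (E_REV_I - v)
    return (leak + spike_term) / TAU_M + synaptic / CM


for v in (-70.0, -55.0, -50.0, -45.0, -40.0):
    print(f"v = {v:6.1f} mV -> dv/dt = {dv_dt(v):8.3f} mV/ms")
```

Without synaptic input the derivative is still negative at `v_thresh`. It only turns positive a few millivolts higher, where the exponential term overtakes the leak and drives the runaway up to `v_spike`.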

EIF = ann.Neuron(

parameters = """

v_rest = -70.0 : population

cm = 0.2 : population

tau_m = 10.0 : population

tau_syn_E = 5.0 : population

tau_syn_I = 10.0 : population

e_rev_E = 0.0 : population

e_rev_I = -80.0 : population

delta_T = 3.0 : population

v_thresh = -55.0 : population

v_reset = -70.0 : population

v_spike = -20.0 : population

""",

equations="""

dv/dt = (v_rest - v + delta_T * exp( (v-v_thresh)/delta_T) )/tau_m + ( g_exc * (e_rev_E - v) + g_inh * (e_rev_I - v) )/cm

tau_syn_E * dg_exc/dt = - g_exc

tau_syn_I * dg_inh/dt = - g_inh

""",

spike = """

v > v_spike

""",

reset = """

v = v_reset

""",

refractory = 2.0

)

# Poisson inputs

i_exc = ann.PoissonPopulation(geometry=200, rates="if t < 200.0 : 2000.0 else : 0.0")

i_inh = ann.PoissonPopulation(geometry=200, rates="if t < 100.0 : 2000.0 else : 0.0")

# Main population

P = ann.Population(geometry=4000, neuron=EIF)

# Subpopulations

Pe = P[:3200]

Pi = P[3200:]

# Projections

we = 1.5 / 1000.0 # excitatory synaptic weight

wi = 2.5 * we # inhibitory synaptic weight

Ce = ann.Projection(Pe, P, 'exc')

Ce.connect_fixed_probability(weights=we, probability=0.05)

Ci = ann.Projection(Pi, P, 'inh')

Ci.connect_fixed_probability(weights=wi, probability=0.05)

Ie = ann.Projection(i_exc, P[:200], 'exc') # inputs to excitatory cells

Ie.connect_one_to_one(weights=we)

Ii = ann.Projection(i_inh, P[3200:3400], 'exc')  # inputs to inhibitory cells

Ii.connect_one_to_one(weights=we)

# Initialization of variables

P.v = -70.0 + 10.0 * np.random.rand(P.size)

P.g_exc = (np.random.randn(P.size) * 2.0 + 5.0) * we

P.g_inh = (np.random.randn(P.size) * 2.0 + 5.0) * wi

# Monitor

m = ann.Monitor(P, 'spike')

# Compile the Network

net = ann.Network()

net.add([P, i_exc, i_inh, Ce, Ci, Ie, Ii, m])

net.compile()

# Simulate

net.simulate(500.0, measure_time=True)

# Retrieve recordings

data = net.get(m).get()

t, n = net.get(m).raster_plot(data['spike'])

plt.figure(figsize=(12, 8))

plt.plot(t, n, '.', markersize=0.2)

plt.show()

Compiling network 3... OK

Simulating 0.5 seconds of the network 3 took 1.0771634578704834 seconds.

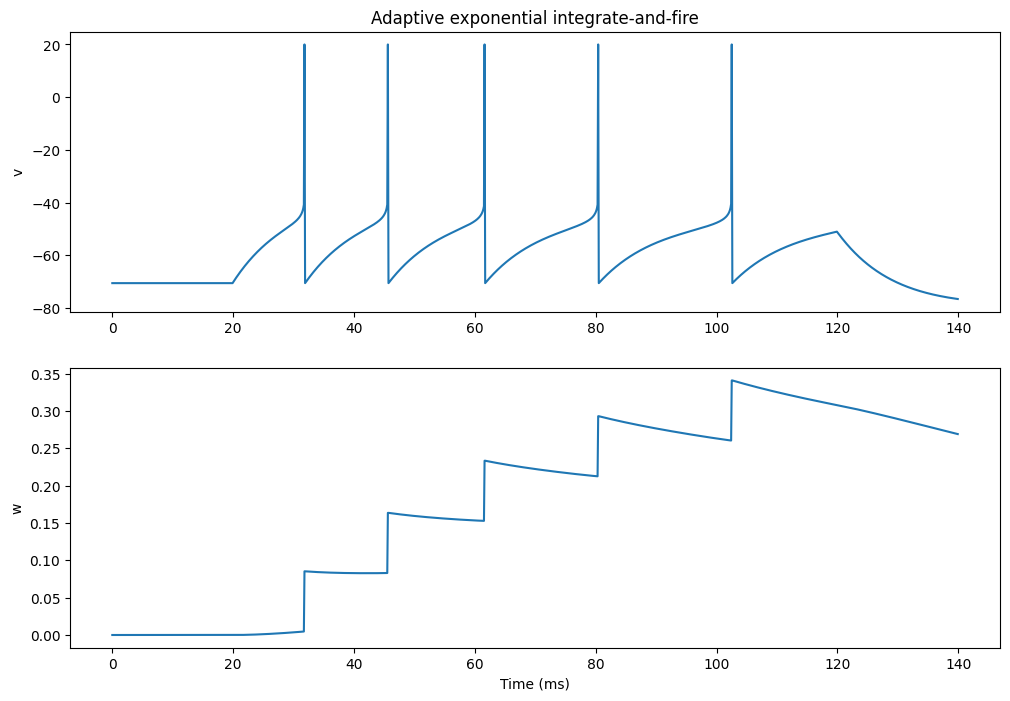

AEIF_cond_exp

Adaptive exponential integrate-and-fire model.

http://www.scholarpedia.org/article/Adaptive_exponential_integrate-and-fire_model

Model introduced in:

Brette R. and Gerstner W. (2005), Adaptive Exponential Integrate-and-Fire Model as an Effective Description of Neuronal Activity, J. Neurophysiol. 94: 3637-3642.

This is a reimplementation of the Brian example:

https://brian.readthedocs.io/en/stable/examples-frompapers_Brette_Gerstner_2005.html
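For readers who want to see the model equations without the simulator, here is a forward-Euler sketch of the adaptive exponential integrate-and-fire neuron in plain Python. It is an illustration, not ANNarchy's implementation of `EIF_cond_exp_isfa_ista`. The membrane parameters are the values reported in the Brette and Gerstner paper, expressed in pF, nS, mV, ms and pA. The cutoff `V_CUT` is an assumed numerical spike threshold.

```python
import math

# Parameters from Brette and Gerstner (2005); units: pF, nS, mV, ms, pA.
C, G_L, E_L = 281.0, 30.0, -70.6
V_T, DELTA_T = -50.4, 2.0
TAU_W, A, B = 144.0, 4.0, 80.5   # regular spiking, b = 0.0805 nA
V_RESET = -70.6
V_CUT = V_T + 5.0 * DELTA_T      # assumption: numerical spike cutoff


def simulate(i_amp=1000.0, t_on=20.0, t_off=120.0, tstop=140.0, dt=0.1):
    """Inject a current step of i_amp (pA) between t_on and t_off (ms)."""
    v, w = E_L, 0.0
    spikes, v_trace, w_trace = [], [], []
    for step in range(round(tstop / dt)):
        t = step * dt
        i_ext = i_amp if t_on <= t < t_off else 0.0
        exp_term = G_L * DELTA_T * math.exp((v - V_T) / DELTA_T)
        dv = (-G_L * (v - E_L) + exp_term - w + i_ext) / C
        dw = (A * (v - E_L) - w) / TAU_W
        v += dt * dv
        w += dt * dw
        if v > V_CUT:
            # Spike: reset the voltage and increment the adaptation current.
            spikes.append(t)
            v = V_RESET
            w += B
        v_trace.append(v)
        w_trace.append(w)
    return spikes, v_trace, w_trace


spikes, v_trace, w_trace = simulate()
print(f"{len(spikes)} spikes, final w = {w_trace[-1]:.1f} pA")
```

Each spike increments `w` by `b`, so the inter-spike intervals lengthen during the current step. This is the spike-frequency adaptation visible in the notebook's plot.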

# Create a population with one AdEx neuron

pop = ann.Population(geometry=1, neuron=ann.EIF_cond_exp_isfa_ista)

# Regular spiking (paper)

pop.tau_w, pop.a, pop.b, pop.v_reset = 144.0, 4.0, 0.0805, -70.6

# Bursting

#pop.tau_w, pop.a, pop.b, pop.v_reset = 20.0, 4.0, 0.5, pop.v_thresh + 5.0

# Fast spiking

#pop.tau_w, pop.a, pop.b, pop.v_reset = 144.0, 2000.0*pop.cm/144.0, 0.0, -70.6

# Monitor

m = ann.Monitor(pop, ['spike', 'v', 'w'])

# Compile the network

net = ann.Network()

net.add([pop, m])

net.compile()

# Add current of 1 nA and simulate

net.simulate(20.0)

net.get(pop).i_offset = 1.0

net.simulate(100.0)

net.get(pop).i_offset = 0.0

net.simulate(20.0)

# Retrieve the results

data = net.get(m).get()

spikes = data['spike'][0]

v = data['v'][:, 0]

w = data['w'][:, 0]

if len(spikes) > 0:
    v[spikes] = 20.0

# Plot the activity

plt.figure(figsize=(12, 8))

plt.subplot(2,1,1)

plt.plot(ann.dt()*np.arange(140.0/ann.dt()), v)

plt.ylabel('v')

plt.title('Adaptive exponential integrate-and-fire')

plt.subplot(2,1,2)

plt.plot(ann.dt()*np.arange(140.0/ann.dt()), w)

plt.xlabel('Time (ms)')

plt.ylabel('w')

plt.show()

Compiling network 4... OK

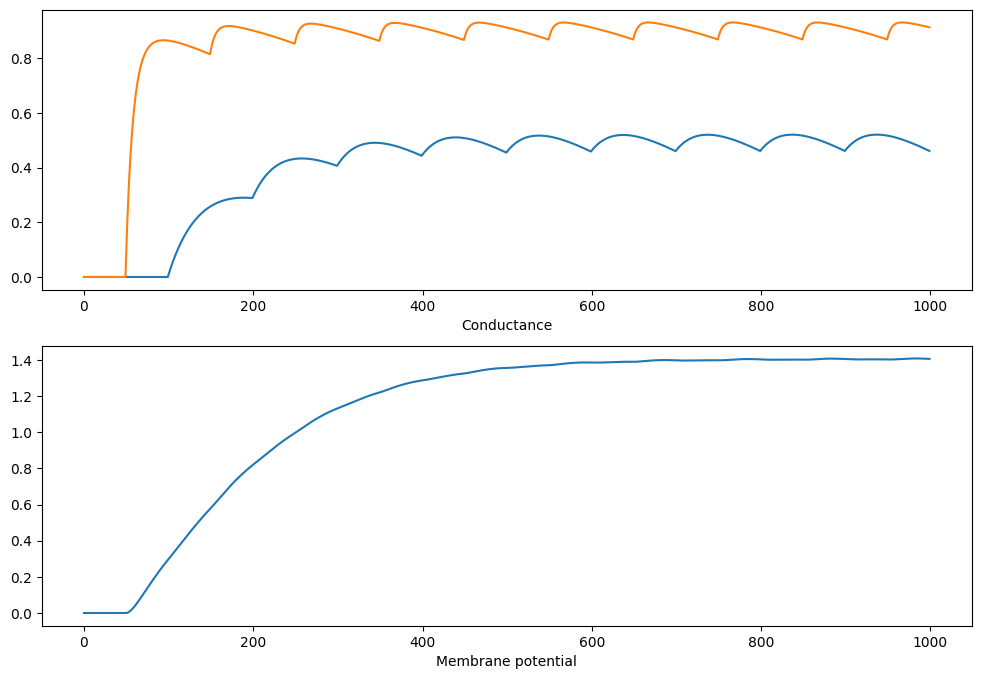

Non-linear synapses

A single IF neuron with two non-linear NMDA synapses.

This is a reimplementation of the Brian example:

http://brian.readthedocs.org/en/latest/examples-synapses_nonlinear_synapses.html
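The non-linearity comes from the `(1 - g)` factor in the synaptic equations of this section: the conductance saturates below 1 however large the weight is. Here is a plain-Python forward-Euler sketch of a single synapse receiving one presynaptic spike at `t = 0`. The function name `peak_conductance` is illustrative.

```python
def peak_conductance(w, tau=10.0, tstop=100.0, dt=0.1):
    """Peak of g after one presynaptic spike at t = 0 (forward Euler)."""
    x, g = w, 0.0  # pre_spike: x += w, starting from x = 0
    peak = 0.0
    for _ in range(round(tstop / dt)):
        dx = -x / tau
        dg = (-g + x * (1.0 - g)) / tau
        x += dt * dx
        g += dt * dg
        peak = max(peak, g)
    return peak


for w in (1.0, 10.0):
    print(f"w = {w:4.1f} -> peak g = {peak_conductance(w):.3f}")
```

A tenfold increase in weight produces much less than a tenfold increase in peak conductance, which is why the two synapses in the notebook (weights 1.0 and 10.0) give traces of comparable amplitude.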

# Neurons

Linear = ann.Neuron(equations="dv/dt = 0.1", spike="v>1.0", reset="v=0.0")

Integrator = ann.Neuron(equations="dv/dt = 0.1*(g_exc -v)", spike="v>2.0", reset="v=0.0")

# Non-linear synapse

NMDA = ann.Synapse(

parameters = """

tau = 10.0 : projection

""",

equations = """

tau * dx/dt = -x

tau * dg/dt = -g + x * (1 -g)

""",

pre_spike = "x += w",

psp = "g"

)

# Populations

input = ann.Population(geometry=2, neuron=Linear)

input.v = [0.0, 0.5]

pop = ann.Population(geometry=1, neuron=Integrator)

# Projection

proj = ann.Projection(

pre=input, post=pop, target='exc',

synapse=NMDA)

proj.connect_from_matrix(weights=[[1.0, 10.0]])

# Monitors

m = ann.Monitor(pop, 'v')

w = ann.Monitor(proj, 'g')

# Compile the network

net = ann.Network()

net.add([input, pop, proj, m, w])

net.compile()

# Simulate for 100 ms

net.simulate(100.0)

# Retrieve recordings

v = net.get(m).get('v')[:, 0]

s = net.get(w).get('g')[:, 0, :]

# Plot the recordings

plt.figure(figsize=(12, 8))

plt.subplot(2,1,1)

plt.plot(ann.dt()*np.arange(s.shape[0]), s[:, 0])

plt.plot(ann.dt()*np.arange(s.shape[0]), s[:, 1])

plt.xlabel("Time (ms)")

plt.ylabel("Conductance")

plt.subplot(2,1,2)

plt.plot(ann.dt()*np.arange(v.size), v)

plt.xlabel("Time (ms)")

plt.ylabel("Membrane potential")

plt.show()

WARNING: Monitor(): it is a bad idea to record synaptic variables of a projection at each time step!

WARNING: Monitor(): it is a bad idea to record synaptic variables of a projection at each time step!

Compiling network 5... OK

STP

Network (CUBA) with short-term synaptic plasticity for excitatory synapses (depressing at long timescales, facilitating at short timescales).

Adapted from:

https://brian.readthedocs.io/en/stable/examples-synapses_short_term_plasticity2.html
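The interplay of facilitation and depression can be checked by hand on a single synapse. The sketch below applies the update rules of the `STP` synapse defined in this section to a regular presynaptic spike train. Between spikes it uses the exact exponential relaxation of the two linear equations, which is one way to evaluate event-driven variables and is not taken from ANNarchy's generated code. The function name `efficacies` is illustrative.

```python
import math

# Parameters copied from the STP synapse in this section.
TAU_REC, TAU_FACIL, U = 200.0, 20.0, 0.2


def efficacies(spike_times):
    """Return u * x at each presynaptic spike (the factor scaling w)."""
    x, u = 1.0, U
    last = None
    out = []
    for t in spike_times:
        if last is not None:
            elapsed = t - last
            # Relax x towards 1 and u towards U since the previous spike.
            x = 1.0 - (1.0 - x) * math.exp(-elapsed / TAU_REC)
            u = U + (u - U) * math.exp(-elapsed / TAU_FACIL)
        out.append(u * x)        # g_target += w * u * x
        x *= (1.0 - u)           # depression: resources are consumed
        u += U * (1.0 - u)       # facilitation: release probability rises
        last = t
    return out


train = [10.0 * k for k in range(10)]  # 100 Hz train, 10 spikes
for t, e in zip(train, efficacies(train)):
    print(f"t = {t:5.1f} ms -> u*x = {e:.3f}")
```

On this train the second spike is stronger than the first (facilitation, with the short `tau_facil`), and later spikes become progressively weaker as resources deplete (depression, with the long `tau_rec`).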

duration = 1000.0

LIF = ann.Neuron(

parameters = """

tau_m = 20.0 : population

tau_e = 5.0 : population

tau_i = 10.0 : population

E_rest = -49.0 : population

E_thresh = -50.0 : population

E_reset = -60.0 : population

""",

equations = """

tau_m * dv/dt = E_rest -v + g_exc - g_inh

tau_e * dg_exc/dt = -g_exc

tau_i * dg_inh/dt = -g_inh

""",

spike = "v > E_thresh",

reset = "v = E_reset"

)

STP = ann.Synapse(

parameters = """

tau_rec = 200.0 : projection

tau_facil = 20.0 : projection

U = 0.2 : projection

""",

equations = """

dx/dt = (1 - x)/tau_rec : init = 1.0, event-driven

du/dt = (U - u)/tau_facil : init = 0.2, event-driven

""",

pre_spike="""

g_target += w * u * x

x *= (1 - u)

u += U * (1 - u)

"""

)

# Population

P = ann.Population(geometry=4000, neuron=LIF)

P.v = ann.Uniform(-60.0, -50.0)

Pe = P[:3200]

Pi = P[3200:]

# Projections

con_e = ann.Projection(pre=Pe, post=P, target='exc', synapse = STP)

con_e.connect_fixed_probability(weights=1.62, probability=0.02)

con_i = ann.Projection(pre=Pi, post=P, target='inh')

con_i.connect_fixed_probability(weights=9.0, probability=0.02)

# Monitor

m = ann.Monitor(P, 'spike')

# Compile the network

net = ann.Network()

net.add([P, con_e, con_i, m])

net.compile()

# Simulate

net.simulate(duration, measure_time=True)

data = net.get(m).get()

t, n = net.get(m).raster_plot(data['spike'])

rates = net.get(m).population_rate(data['spike'], 5.0)

print('Total number of spikes: ' + str(len(t)))

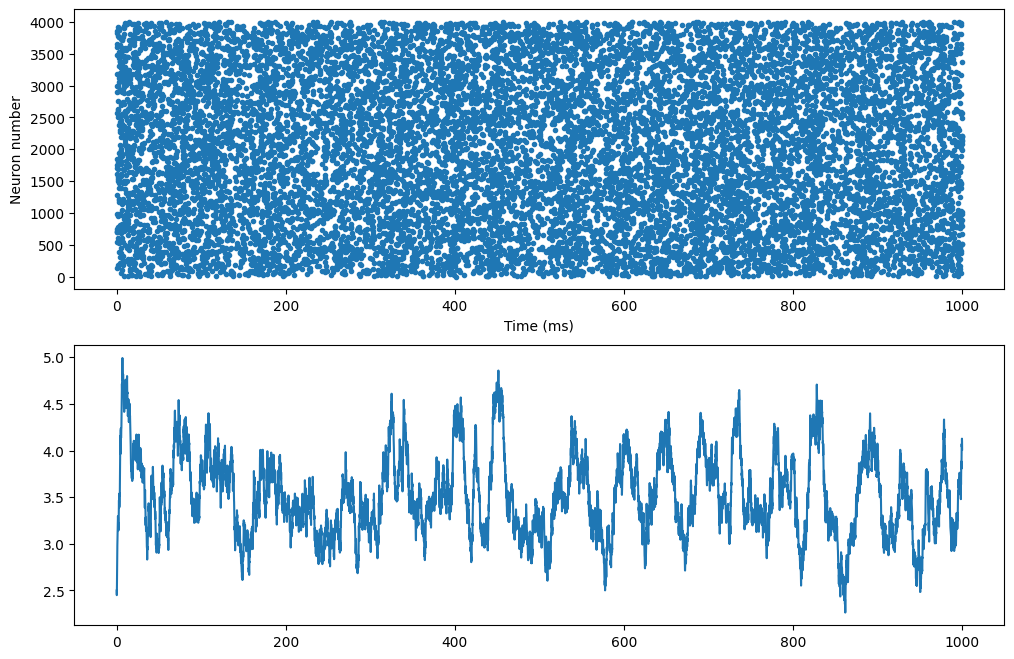

plt.figure(figsize=(12, 8))

plt.subplot(211)

plt.plot(t, n, '.')

plt.xlabel('Time (ms)')

plt.ylabel('Neuron number')

plt.subplot(212)

plt.plot(np.arange(rates.size)*ann.dt(), rates)

plt.show()Compiling network 6... OK

Simulating 1.0 seconds of the network 6 took 0.31792426109313965 seconds.

Total number of spikes: 14115